Qualcomm continuously delivering technology upgrades to its XR platform as glasses gradually become next-generation mobile compute devices

The long arc of Extended Reality (XR), a term that covers augmented, mixed and virtual reality, points toward standalone glasses and headsets that deliver mobile, immersive experiences and gradually become primary mobile computing platforms. At the recent Mobile World Congress in Barcelona, Qualcomm's XR spotlight was on dynamic distributed computing, which aims to deliver a seamless user experience regardless of how network conditions vary.

Qualcomm Vice President of Engineering Hemanth Sampath, in an interview with RCR Wireless News, explained the primary trendline in XR: “Initially we see these glasses more as a companion to our phone or a watch, eventually transforming into the next-generation compute device where you could have integrated 5G into the AR glasses.”

In terms of delivering mobility and consistent user experiences, Sampath discussed how Qualcomm is thinking about managing variations in throughput, network load and RF conditions without compromising on-device performance. “What’s going to be very important is for the applications to be able to dynamically adapt to these changing…conditions. And we make that happen with our on-device [application programming interfaces]. Our on-device APIs provide real, up to date information about the network and allow applications to dynamically change. We also have a suite of APIs…that’s continuously optimizing to deliver the lowest latency experiences.”

In addition to using APIs for application and network optimization, Qualcomm's approach to dynamic distributed computing involves shifting heavy processing, such as graphics rendering, between the device and the cloud depending on the quality of the cellular connection. Sampath described the trade-offs: “when you essentially run it on the cloud, you burn less battery. You also take advantage of the higher-powered graphics. And when you bring the compute over to the phone, you have more battery consumption on the phone.” All told, he said, the approach “deliver[s] a very seamless end-user experience.”

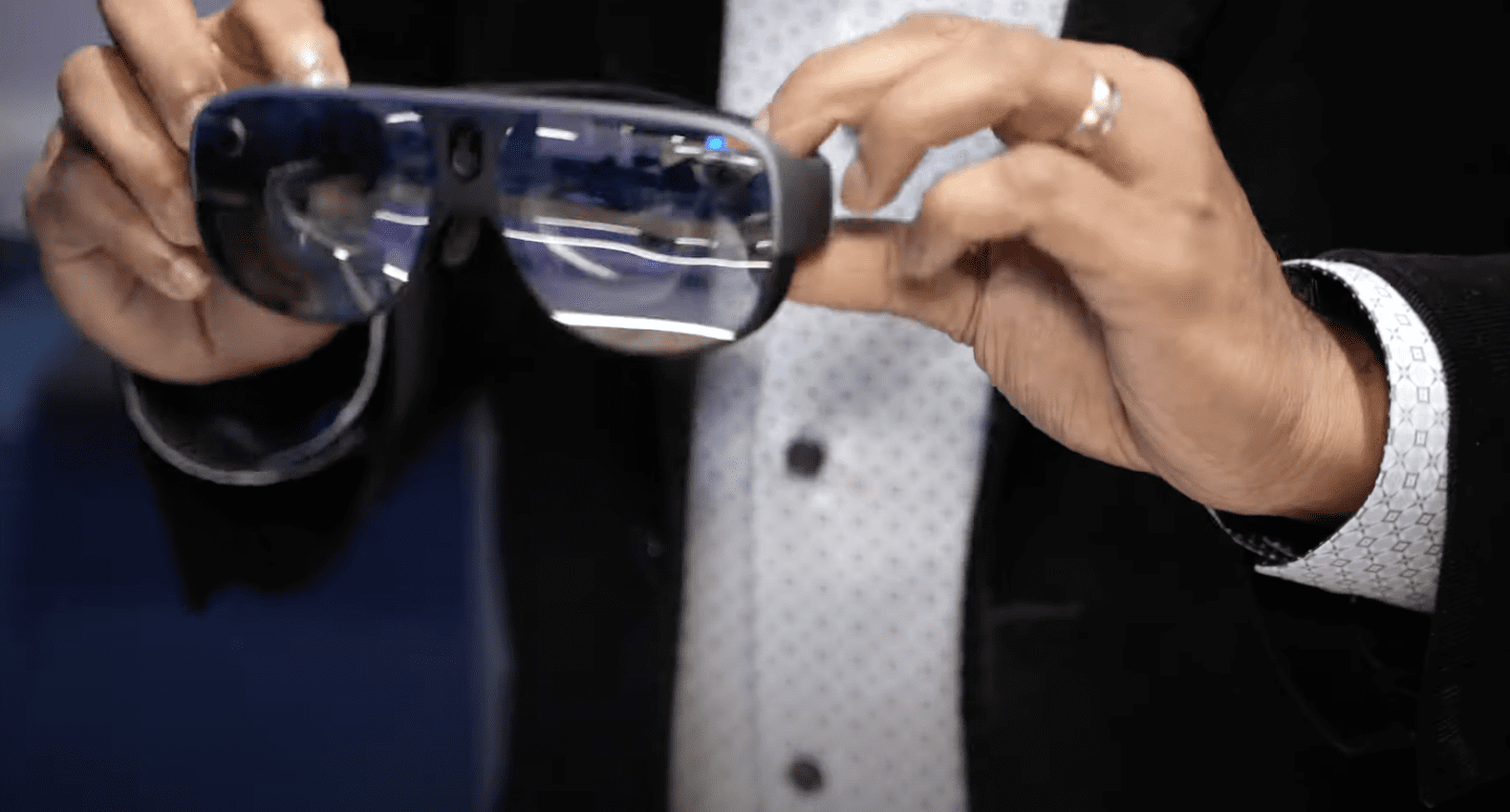

During Mobile World Congress, Sampath demonstrated a collaboration with Ericsson and Hololight. Over a sub-7 GHz 5G network built on Ericsson infrastructure, Qualcomm connected a glasses-form-factor reference design based on the Snapdragon AR2 Gen 1 platform, along with a companion Snapdragon-powered mobile handset. Hololight provided a streaming AR application developed with the Snapdragon Spaces XR developer platform. The demo showed how dynamic distributed compute lets the application toggle between remote graphics rendering in an edge cloud and local rendering on the device as load is added to the network. The edge server and handset stay in sync across the 5G link, exchanging application state information that the device uses to select remote or local rendering.
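To make the local/remote toggle more concrete, here is a minimal Python sketch of how a client might pick a rendering mode from link measurements. It is an illustration under stated assumptions, not how Qualcomm, Ericsson or Hololight implemented the demo: `LinkSample`, `RenderModeSelector` and every threshold are hypothetical, and a real application would take its measurements from on-device network APIs (and the application state shared with the edge server) rather than from a hand-built list.

```python
from dataclasses import dataclass
from enum import Enum


class RenderMode(Enum):
    REMOTE = "remote"  # frames rendered on the edge server and streamed to the device
    LOCAL = "local"    # frames rendered on the handset/glasses


@dataclass
class LinkSample:
    """One network measurement. Hypothetical fields; a real on-device API may differ."""
    downlink_mbps: float
    rtt_ms: float


@dataclass
class RenderModeSelector:
    # Illustrative thresholds only, not values from the MWC demo.
    min_downlink_mbps: float = 50.0
    max_rtt_ms: float = 30.0
    # Consecutive disagreeing samples required before switching (hysteresis).
    switch_after: int = 3
    mode: RenderMode = RenderMode.REMOTE
    _streak: int = 0

    def link_ok(self, s: LinkSample) -> bool:
        """True if the link looks good enough to sustain remote rendering."""
        return s.downlink_mbps >= self.min_downlink_mbps and s.rtt_ms <= self.max_rtt_ms

    def update(self, s: LinkSample) -> RenderMode:
        """Feed one sample; return the (possibly switched) rendering mode."""
        ok = self.link_ok(s)
        # A sample argues for switching if it contradicts the current mode.
        wants_switch = (self.mode is RenderMode.REMOTE and not ok) or (
            self.mode is RenderMode.LOCAL and ok
        )
        self._streak = self._streak + 1 if wants_switch else 0
        if self._streak >= self.switch_after:
            self.mode = (
                RenderMode.LOCAL if self.mode is RenderMode.REMOTE else RenderMode.REMOTE
            )
            self._streak = 0
        return self.mode


if __name__ == "__main__":
    selector = RenderModeSelector()
    # Good link, then network load drives throughput down and latency up, then it recovers.
    samples = [LinkSample(120, 12)] * 2 + [LinkSample(20, 60)] * 3 + [LinkSample(150, 10)] * 3
    print([selector.update(s).value for s in samples])
    # prints ['remote', 'remote', 'remote', 'remote', 'local', 'local', 'local', 'remote']
```

Requiring several consecutive samples before switching keeps the application from flapping between modes when the link hovers near a threshold. In practice, the trade-offs Sampath describes (battery consumption versus access to higher-powered graphics) would shape where such thresholds sit.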

Returning to the big picture, Sampath gave the example of the Ray-Ban Meta smart glasses, powered by the Snapdragon AR1 Gen 1 Platform: the glasses take photos and videos, deliver immersive audio and livestream to Instagram, and users can interact with Meta AI through a voice interface. While devices like these rely on a handset for connectivity today, more features will gradually move onto the glasses themselves. As Sampath put it, “Longer term we will see this be a standalone 5G integrated device that may not need a companion device, that talks directly to the network.”
